Merlin Kafka

How AI is creating a flywheel for Design

Exploring product concepts used to take weeks or months. At Sequence, we now prototype ten different concepts in a single day. With AI, we can systematically explore a larger solution space than was previously economical.

With a four-person design team at Sequence, we've landed on a systematic approach to quickly explore a broad range of ideas. Below, we’re sharing what we've learned so you can try the approach with your own team.

Previously: Time constraints

Until recently, testing and exploring ideas took time. In a fast-moving startup, this creates inherent trade-offs. You often don’t have the time to explore the entire solution space or test riskier concepts.

Most product teams end up:

- Choosing safer, more predictable features over experimental ones

- Debating concepts in Google Docs rather than building real prototypes

- Building the first reasonable idea instead of finding the best one

Our "Promptotyping" process

We’ve leveled up our design process to include a step we’ve started to call “Promptotyping”: we start with a carefully crafted prompt, then iterate through dozens of variations to discover the right mental model.

Here’s how it works:

Step 1: Write the experimental prompt

Instead of jumping into Figma, we start by writing a detailed prompt that captures:

- The core user problem we're solving.

- The mental model we want to test (as opposed to specific UI).

- Key interactions and user flows.

- Relevant domain context: often a one-pager, PRD summary, or customer feedback that gives the AI context about our product and the problem space we’re in.

This prompt becomes our experimental hypothesis.
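To make the structure concrete, here is a minimal sketch of how the four ingredients above could be assembled into one prompt. The `PromptBrief` class, its field names, and the sample invoice scenario are all hypothetical placeholders, not a description of Sequence's actual tooling.

```python
from dataclasses import dataclass


@dataclass
class PromptBrief:
    """Hypothetical container for the four ingredients listed above."""

    user_problem: str
    mental_model: str
    key_flows: list[str]
    domain_context: str

    def render(self) -> str:
        # Number the flows so later prompts can refer back to them.
        flows = "\n".join(
            f"{i}. {flow}" for i, flow in enumerate(self.key_flows, start=1)
        )
        return (
            f"User problem:\n{self.user_problem}\n\n"
            f"Mental model to test (not a specific UI):\n{self.mental_model}\n\n"
            f"Key interactions and flows:\n{flows}\n\n"
            f"Domain context:\n{self.domain_context}\n\n"
            "Build a rough, unbranded, clickable prototype of this concept."
        )


# Placeholder values for illustration only.
brief = PromptBrief(
    user_problem="Finance teams cannot see why an invoice total changed.",
    mental_model="An invoice is a timeline of pricing events.",
    key_flows=["Open an invoice", "Step through each pricing event"],
    domain_context="Paste a one-pager, PRD summary, or customer feedback here.",
)
base_prompt = brief.render()
print(base_prompt)
```

Keeping the mental model in its own field makes it easy to swap that one line between experiments while the problem statement and context stay fixed.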

Step 2: Generate 10+ variations

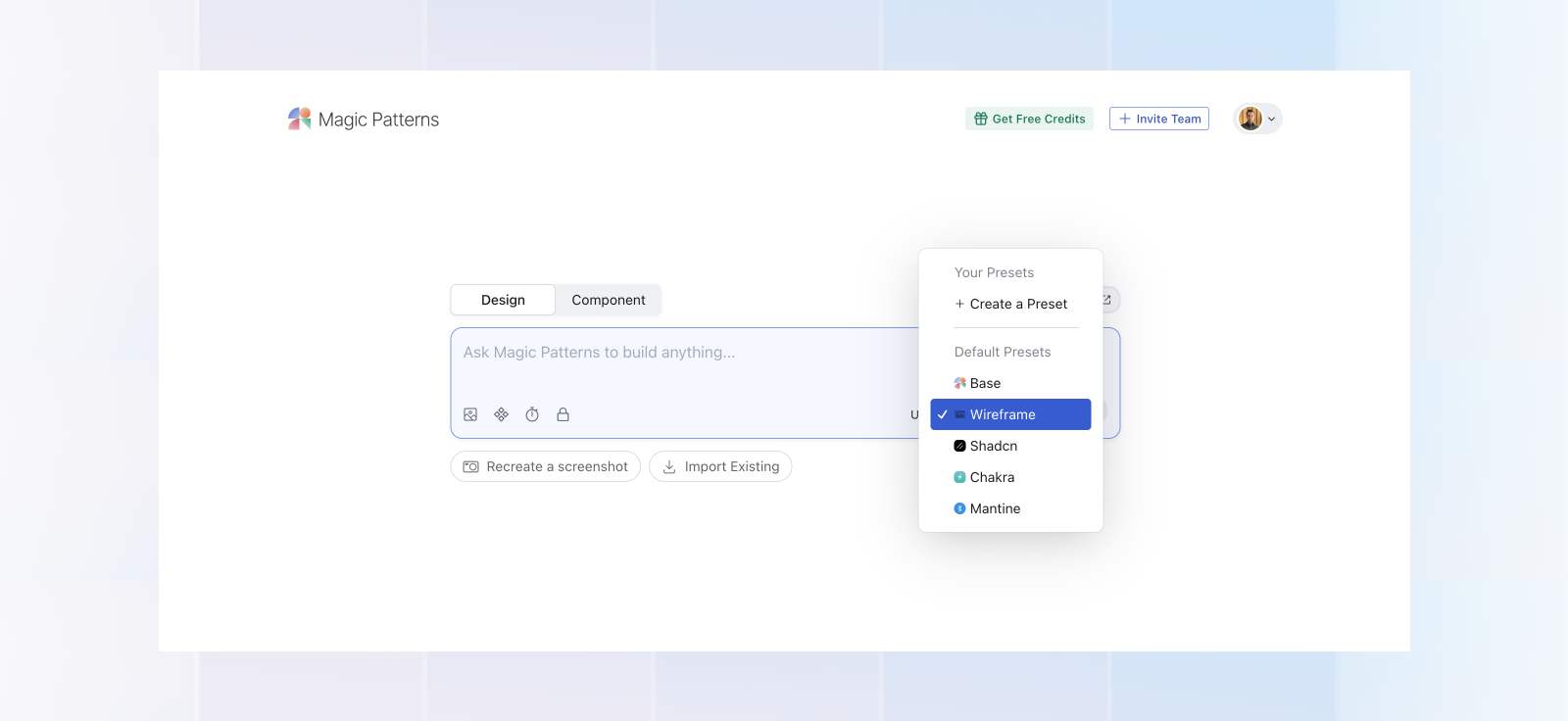

Using Claude Artifacts, ChatGPT, Lovable and Magic Patterns, we generate ten different prototypes using variations of the same original prompt.

We remix prompts by forcing the LLM to approach the problem from different perspectives (e.g. "redesign this as if you were Notion"). The goal is to get creative and generate concepts beyond conventional thinking.

Each generation explores a different solution path, one we would previously have had to explore manually.
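The remixing step can be sketched as a small helper that turns one base prompt into a set of variants. This is an illustration under assumptions: the `remix` function is hypothetical, only the "Notion" perspective comes from the text above, and the code only builds prompt strings. It does not call any of the tools mentioned, so each variant would still be pasted into a tool by hand.

```python
def remix(base_prompt: str, perspectives: list[str]) -> list[str]:
    """Return the unchanged prompt plus one variant per perspective."""
    variants = [base_prompt]
    for perspective in perspectives:
        variants.append(
            f"{base_prompt}\n\nRedesign this as if you were {perspective}."
        )
    return variants


# Placeholder base prompt for illustration.
base_prompt = "Prototype a way for finance teams to review invoice changes."
# Only "Notion" comes from the text above; the other entries are placeholders.
perspectives = ["Notion", "a spreadsheet", "a chat interface"]

variants = remix(base_prompt, perspectives)
for number, prompt in enumerate(variants, start=1):
    print(f"--- Variant {number} ---\n{prompt}\n")
```

Including the unmodified prompt as the first variant gives a baseline to compare the remixed concepts against.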

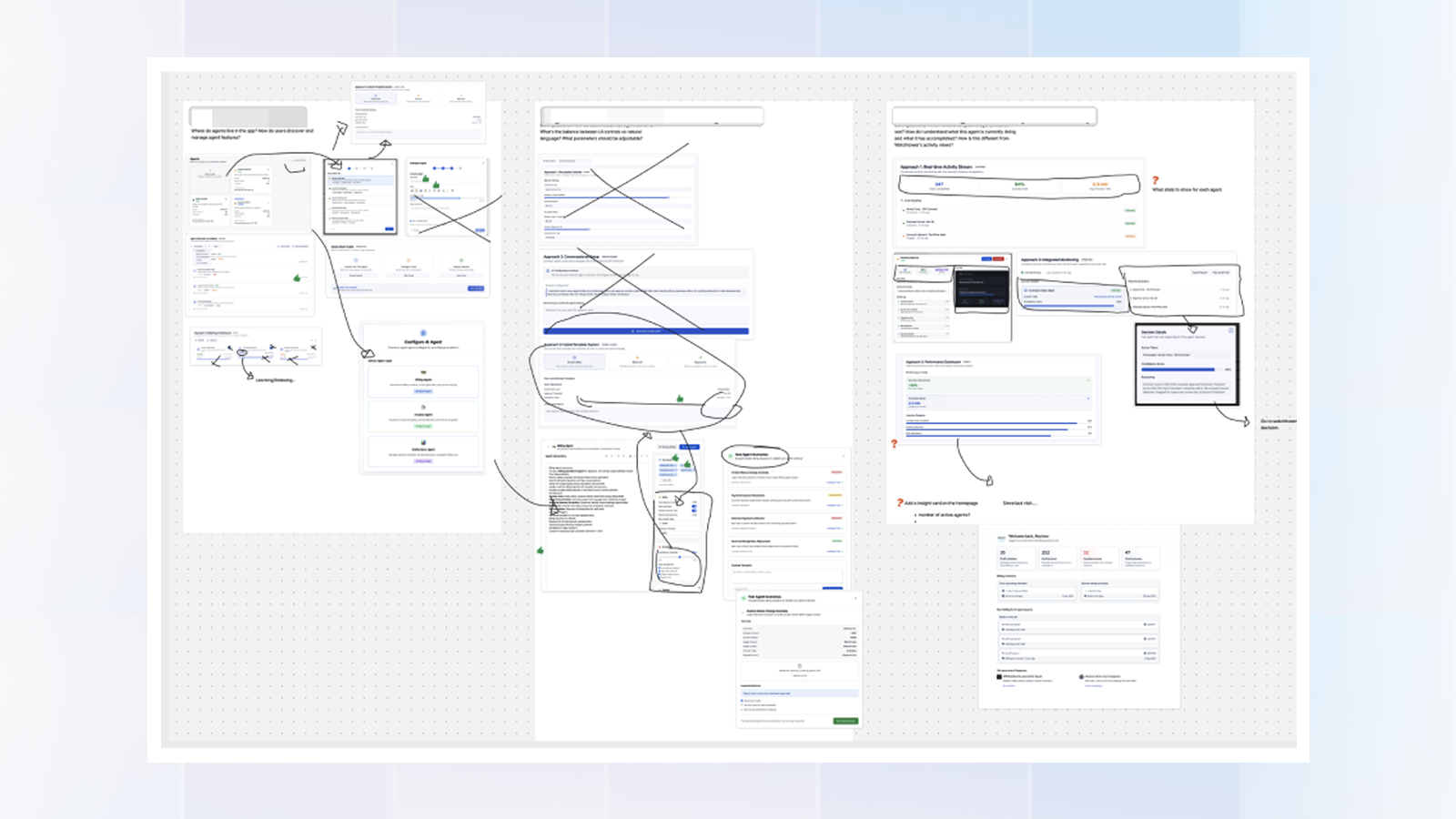

Step 3: Rapid evaluation and synthesis

Next, we assemble all prototypes on a canvas, highlighting what works and what doesn't. Then we synthesize the best elements into a new prompt and generate another round. This way, we can quickly form a mental model of what works and what doesn’t.

Step 4: Rapid customer feedback

Once we've converged on a promising direction, we can immediately share the working prototype with customers via a link.

This way, ideas get tested quickly, and customer feedback influences the concept while it's still malleable.

Why low-fidelity prototypes work better

We intentionally keep our ‘promptotypes’ unbranded and visually rough. Generic fonts, basic colors, placeholder content. Magic Patterns even has a ‘Wireframe’ mode that forces prototypes to stay simple.

We’ve found that overly polished prototypes create an anchoring bias. It’s easy to fall in love with specific visual implementations rather than focusing on whether the underlying concept actually solves the user's problem.

The goal is to answer "does this solve my problem?" rather than "how does this look?"

What we learned about mental models

Most products or features that don’t end up solving the customer’s problem are mental model failures. In other words, the product model doesn’t fit the user and domain model in the real world. Users reject features not because they're poorly designed, but because the underlying mental model doesn't match how they think about the problem.

By rapidly prototyping a large variety of concepts, we can build a stronger intuition for which model best fits the problem we’re solving.

What we want to try next

Our prototyping lab is still evolving. Here are some experiments we're planning:

Multi-concept testing: Instead of sharing one prototype at a time, we want to create single links that let customers try multiple approaches to the same problem. This would let us test comparative preference rather than just individual concept validation.

Embedded feedback loops: We're exploring ways to let customers drop feedback directly into prototypes, such as comments on specific elements, quick polls, or reaction buttons. This would eliminate the friction between "trying the prototype" and "giving feedback."
